An Environmental History of AI

Laura Stark, 2024-25 RPW Center Faculty Fellow. This year’s group is exploring the theme of Emerging Technologies in Human Context: Past, Present, and Future.

AI has emerged as a massive technical and scientific innovation in the past thirty years, to the extent that historians now treat AI as a driving force of history (on the order of economic, political, and social factors) for any event after 1980.(1) Over the same time period, public awareness of anthropogenic climate change has gained force globally.(2) These two trends, the development of a stunning innovation and growing awareness of a catastrophic problem, became linked in efforts to solve the climate crisis with AI. From the United Nations’ AI4ClimateAction Initiative to Google’s Green Light Project, designed to reduce car emissions from idling at stop lights, AI has been recruited by researchers, policymakers, and pundits alike as the great hope for planetary survival.(3)

Yet, critics observe, this story is a classic case of a technofix: climate concerns may be addressed through AI, but such efforts often leave in place the underlying growth economy—unsustainable, extractive, and unequally experienced—that is driving the climate crisis. The paradox is that AI itself has exacted enormous environmental costs, even as it is undoubtedly an essential tool for climate scientists to mitigate our global climate emergency.

It’s far from obvious that researchers, working under the banner of value-neutral science, would emerge as the major political force that they are today. And certainly not all scientists have. Far from being disinterested parties, however, a critical mass of scientists since the late 1960s have emerged as political powerhouses, inspiring political movements and laboring for change.

The Movement for Green AI is a case in point. This project aims to understand the patterned, structural conditions that have enabled and constrained data scientists’ work towards liberatory ends. What structures, networks, and ideologies have supported technologists who have enacted transformative politics through their work? How have scientists criticized their tools without undermining their authority? How have they directed attention, resources, and research to promote ecological care rather than newer, better, faster, and more profitable technologies? How can meaningful mobilization in the sciences be distinguished from greenwashing and industry co-option?

Insights into the history and future of the Movement for Green AI address Vanderbilt’s commitments to fostering critical data literacy and to achieving carbon neutrality by 2050. With these commitments and a spirit of inquiry, it is possible to explore how academics, students, activists, and tech users can think critically and live responsibly in the age of AI.

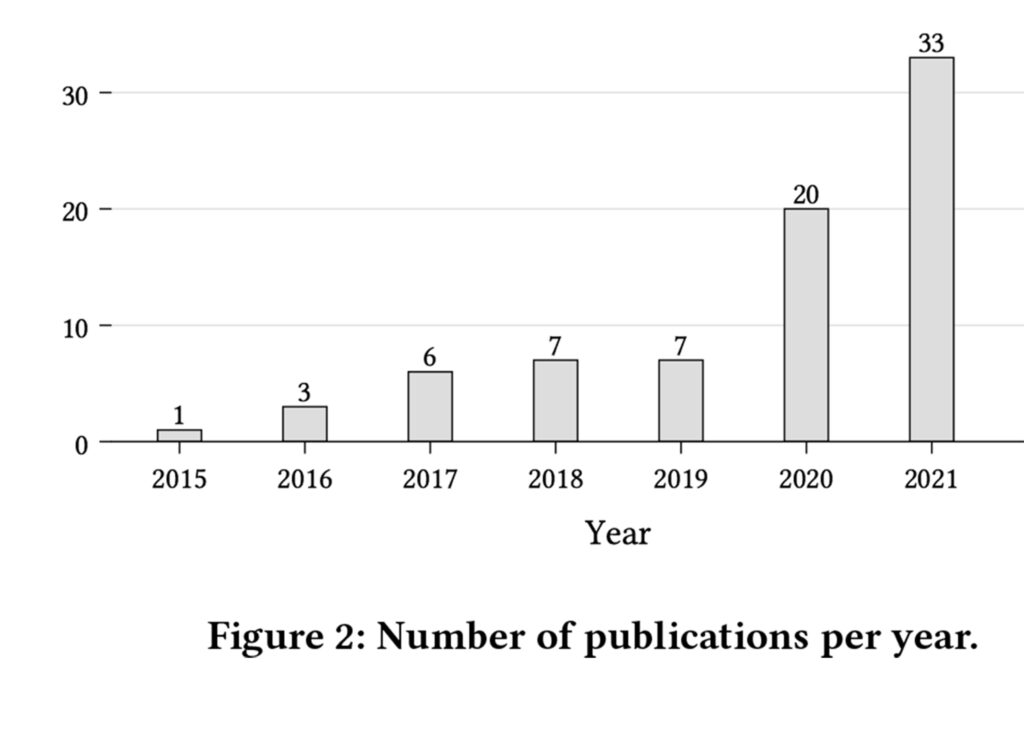

Graph 1: Number of computer science articles on the topic “Green AI” per year published in major journals and conference proceedings. From Verdecchia et al. “A Systematic Review of Green AI.” arXiv, May 5, 2023. https://doi.org/10.48550/arXiv.2301.11047.

1 Matthew L. Jones, “AI in History,” The American Historical Review 128, no. 3 (September 1, 2023): 1360–67, https://doi.org/10.1093/ahr/rhad361.

2 Naomi Oreskes and Erik M. Conway, Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming, 1st U.S. ed. (New York: Bloomsbury Press, 2010).

3 “Harnessing AI for Better Urban Mobility: A Deep Dive into Google’s Project Green Light,” Medium, October 18, 2023, https://medium.com/@ai.agenda/harnessing-ai-for-better-urban-mobility-a-deep-dive-into-googles-project-green-light-61a41809a1b7; “#AI4ClimateAction,” https://unfccc.int/ttclear/artificial_intelligence.

Laura Stark is a historical sociologist of science and medicine, and Associate Professor at Vanderbilt University. Her work has focused on the political economy of “human subjects” research with attention to law, labor, and race. Her recent work has broadened to include the data sciences, and she is currently researching the data and environmental inputs and impacts of generative AI. Stark is the author of Behind Closed Doors: IRBs and the Making of Ethical Research (UP Chicago, 2012) and The Normals: A People’s History (UP Chicago, under contract). Her latest project is An Environmental History of AI. Stark’s commentaries and book criticism have appeared in The Lancet, New England Journal of Medicine, Science, The New Republic, The LA Times, and other venues. www.laura-stark.com